do you love challenges?

gain practical skills and a creative mindset for your future

#count% of all DIT graduates will find a job within 2 months

deepen your skills - shape your future

#robotics #smartsensors #smartactuators #AI #artificialintelligence #machinelearning

#dataanalysis #systemdesign #bigdata #deeplearning #autonomoussystems #innovative #englishpostgraduateprogramme

If you hold an undergraduate degree in mechatronics or a related field, the consecutive, application-oriented master's programme "Artificial Intelligence for Smart Sensors and Actuators" qualifies you as an expert in the development and use of intelligent technical systems for data processing, data analysis and automation. Course content covering artificial intelligence, machine learning and innovative sensors and actuators also equips students to work creatively in research and development departments.

All the important information at a glance: Take a look at this brief introduction to the master's program in Artificial Intelligence for Smart Sensors and Actuators.

fact sheet

Degree: Master of Engineering (M.Eng.)

Duration: 3 semesters (1.5 years)

ECTS points: 90

Start: 1st October (winter semester) and 15th March (summer semester)

Location: Cham

Language of Instruction: English

Application Period:

- Entry in October (winter semester): 15 April - 15 June

- Entry in March (summer semester): 01 October - 01 December

Admission Requirements:

- Completion of an undergraduate degree programme with at least 210 ECTS credits in mechatronics or a related field at a German or foreign university, or an equivalent degree. The DIT examination board decides on the equivalence of degrees based on the documents submitted.

- For this reason, applicants who obtained their academic training (e.g. their undergraduate degree) in states that are not party to the Lisbon Recognition Convention are recommended to submit a GATE or GRE (General) certificate as well as a recognised German language certificate to further substantiate their eligibility for this programme.

- In addition, applicants may need to demonstrate their subject-specific qualification for this programme in an aptitude assessment. This written exam covers subject areas relevant to AI for Smart Sensors and Actuators, such as mathematics, physics, electronics and electrical engineering, system theory, control engineering and computer science. It is conducted both online and on-site at Campus Cham of the Deggendorf Institute of Technology. The exam determines subject-specific eligibility and is decisive for admission to this master's programme.

Concerning all applications for the winter semester:

- For all applications received from 15th April to 15th June, the online admission test takes place shortly after the end of the application period.

- The date of the online admission test cannot be chosen individually. Instead, the examination board sets the test date and communicates it in the invitation email.

- Depending on when their application is received, applicants receive their invitation email either in the middle or at the end of the application period.

Concerning all applications for the summer semester:

- For all applications received from 1st October to 1st December, the online admission test takes place shortly after the end of the application period.

- The date of the online admission test cannot be chosen individually. Instead, the examination board sets the test date and communicates it in the invitation email.

- Depending on when their application is received, applicants receive their invitation email either in the middle or at the end of the application period.

Language Requirements:

- If German is not your native language, proof of sufficient German skills is necessary.

- If English is not your native language, proof of sufficient English skills is necessary.

- The admission requirements are stipulated in the study and examination regulations (§ 3 Qualification for the programme, § 4 Proof of ECTS credits not yet obtained, § 5 Modules and proof of performance).

Fees:

- No tuition fees; only the student union fee is payable

- International students from non-EU/EEA countries are required to pay service fees each semester.

Enquiries:

- Course enquiries about the study programme "Artificial Intelligence for Smart Sensors and Actuators" at the teaching location Cham: studium-cham@th-deg.de

- Information about the aptitude assessment: master-mss-exam@th-deg.de

- For general enquiries about studying at DIT: contact our prospective student advisors or email welcome@th-deg.de

Since completing my bachelor's degree, I’ve always wanted to dive deeper into the topic of building my own robot. During my master’s program, I learned more than I expected – from how to simulate my robot and design its behavior, to how to communicate between IoT protocols. The opportunities in companies are vast, as we’ve gained knowledge in various fields that we can apply. The challenge today is how to integrate new creations into private networks and ensure the system's efficiency.

Raquel, Student Master Artificial Intelligence for Smart Sensors and Actuators

Do you have questions about the degree program or student life at DIT? Then feel free to contact Raquel or one of our Student Ambassadors directly.

course objectives

Mastering intelligent sensor and actuator systems requires specialised scientific and technical training tailored to current challenges. Over three semesters, the programme addresses these challenges through structured, in-depth teaching of the following topics:

- Machine Learning Processes (neural networks)

- Embedded Control for Smart Sensors and Actuators

- Sensor Technology (e.g. MEMS)

- Methods of System Networking (wired and wireless communication)

- Methods of Data Processing (e.g. Cloud Computing, Big Data)

- System Design

The course content is put into practice through case studies conducted in collaboration with industry experts.

The Technology Campus Cham (research and development in mechatronic systems) and the Digital Start-Up Centre (focus: digital production) are located directly on Campus Cham. Together they provide a specialised, application-oriented environment for highly innovative teaching concepts.

career prospects

Artificial Intelligence (AI) is a sub-discipline of computer science that researches "intelligent" problem-solving behaviour and builds "intelligent" computer systems. In many technical applications, AI-based systems are fed with sensor data and pass information that influences the process back to actuators. The overall function of such a system depends equally on the interaction between information processing and the process, which acts as both data source and data sink, and on the quality of the sensor data and of the actuator interventions. In addition to the actual measuring principle for the respective process variable, a smart sensor also provides signal pre-processing, monitoring algorithms that safeguard the sensor function, connectivity (e.g. Bluetooth, Wi-Fi, 5G) and, depending on the application, power supply functions. Likewise, smart actuators supplement the actual control intervention in the technical process with extended signal processing, monitoring mechanisms and various communication methods. The resulting signal-processing system has additional "intelligent" properties that further enhance its performance.

After graduating from this programme, you will have the qualifications needed to establish yourself as an expert in this rapidly changing professional field and to play an active part in its development.
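To make the smart-sensor functions above more concrete, here is a minimal sketch of a sensor-side processing chain: a moving-average filter as signal pre-processing, plus a plausibility check as a simple monitoring algorithm. The class name `SmartSensor`, the window length and the valid range are illustrative assumptions, not part of the programme description; connectivity and power supply functions are left out.

```python
from collections import deque
from typing import Optional, Tuple


class SmartSensor:
    """Hypothetical smart-sensor model: pre-processing plus self-monitoring."""

    def __init__(self, window: int = 4, valid_min: float = -40.0, valid_max: float = 125.0):
        # Assumed plausible measurement range for the monitored process variable.
        self.valid_min = valid_min
        self.valid_max = valid_max
        # Keeps only the last `window` valid samples for the moving average.
        self._buffer = deque(maxlen=window)

    def process(self, raw: float) -> Tuple[Optional[float], bool]:
        """Return (filtered value, ok flag) for one raw sample."""
        # Monitoring: implausible samples are flagged and not fed into the filter.
        if not (self.valid_min <= raw <= self.valid_max):
            return None, False
        # Pre-processing: moving average over the most recent valid samples.
        self._buffer.append(raw)
        return sum(self._buffer) / len(self._buffer), True
```

With `SmartSensor(window=2)`, the samples 20.0 and 22.0 yield 21.0, an outlier of 500.0 is rejected with `ok=False`, and a following 24.0 yields 23.0, because the outlier never entered the filter.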

Motivation

In infrastructure projects, an essential requirement is that the individual project documents are named according to a standardised scheme, e.g. VGB-S832. However, the documents created are not always named in line with the specifications. In addition, because of the large number of project documents, there are sometimes discrepancies between the document list and the documents actually created.

With the help of AI, in particular Natural Language Processing (NLP), the management of project documents is to be partially automated so that resources are freed up for other activities.

Project Aims

- Classification of documents

  - The document classes are to be recognised independently of the document name

  - The recognition of document classes should be applicable to text documents as well as scanned documents and drawings in German and English

- Renaming of files according to VGB-S832, if necessary

- Automatic creation of the document list based on the existing documents and identification of deviations from existing document lists

Approach

- Clean up the existing project documentation, in particular by correcting partially wrong classifications and by avoiding or reducing imbalanced datasets

- Creation and testing of different classification models with different settings for class recognition

- Selection and use of the best models and implementation of file renaming

- Automatic creation of the deviation list
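The deviation-list step can be illustrated with plain set operations. This is a minimal sketch, assuming both the document list and the documents actually created are available as collections of document names; the function name `deviation_list` and the example names are hypothetical and do not follow the VGB-S832 scheme.

```python
from typing import Dict, Iterable, List


def deviation_list(listed: Iterable[str], existing: Iterable[str]) -> Dict[str, List[str]]:
    """Compare a document list against the documents that actually exist."""
    listed_set, existing_set = set(listed), set(existing)
    return {
        # In the document list, but no such document was created.
        "missing": sorted(listed_set - existing_set),
        # Created, but not recorded in the document list.
        "unlisted": sorted(existing_set - listed_set),
    }
```

For example, comparing the list `["A-001", "A-002", "B-001"]` with the files `["A-001", "B-001", "C-007"]` reports `A-002` as missing and `C-007` as unlisted. In practice the names on both sides would first be normalised by the classification and renaming steps.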

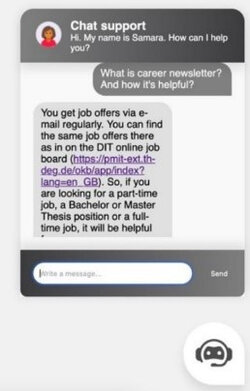

Computer-assisted chatbots are technical dialogue systems based on the recognition of natural language. They answer user queries automatically and in real time, without direct human intervention.

Project Aims

A student project group designed an extensive catalogue of questions about studying at Campus Cham. In cooperation with the relevant university departments, the group defined correct answer patterns for these questions and fed them into the system. The application recognises the user input and compares it with the predefined answer patterns. In the future it is intended, for example, to help prospective students navigate the wealth of information on the website more easily.
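One simple way to compare user input with predefined answer patterns is keyword overlap. The sketch below is a hypothetical illustration, assuming each answer is stored together with a set of keywords; the project's actual matching method is not described, and the two patterns shown are placeholders built from the fact sheet on this page.

```python
import re
from typing import List, Optional, Set, Tuple

# Hypothetical answer patterns: (keywords, predefined answer).
PATTERNS: List[Tuple[Set[str], str]] = [
    ({"application", "apply", "deadline", "period"},
     "Applications for the winter semester run from 15 April to 15 June."),
    ({"fees", "tuition", "cost", "costs"},
     "There are no tuition fees, only the student union fee."),
]


def answer(query: str, min_overlap: int = 1) -> Optional[str]:
    """Return the predefined answer whose keywords overlap most with the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    best_score, best_answer = 0, None
    for keywords, text in PATTERNS:
        score = len(words & keywords)
        if score > best_score:
            best_score, best_answer = score, text
    # No sufficiently matching pattern: let a human or a fallback message take over.
    return best_answer if best_score >= min_overlap else None
```

Keyword overlap is easy to maintain by non-programmers in the university departments, but it does not handle synonyms or spelling mistakes; an NLP-based intent classifier would be the usual next step.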

Images

- A chatbot dialogue box.

Project Overview

With growing traffic volumes and vehicle numbers worldwide, traffic supervision has become increasingly complex. Artificial intelligence (AI) technology can reduce this complexity and increase throughput.

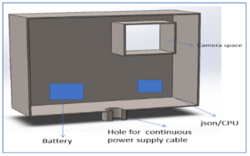

The project “Traffic Supervision System” was initiated in the Sensor Lab at Campus Cham. The objective was to build a prototype “watch box” that integrates AI hardware with object detection software and monitors traffic and vehicle features in real time. The plan is to connect the watch box to a centralised system via a direct cloud connection.

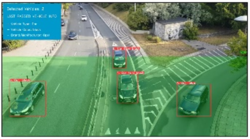

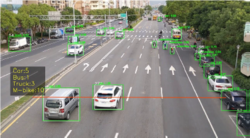

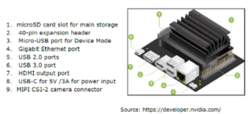

The YOLOv5 object detection model was trained to classify vehicles and their manufacturers. The DeepSORT algorithm is used for tracking and counting (Figure 1). Figure 2 shows the video captured by the camera. The vehicle characteristics, namely type, count, manufacturer and colour, are stored together with a timestamp in an Excel file (Figure 3). On the test data, the model achieved an accuracy of 89%. The AI hardware consists of a Jetson Nano and a camera (Figure 4). Figure 5 shows the watch box design for the hardware.
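Counting with a tracker typically relies on persistent track IDs: each vehicle is counted once, when its track crosses a virtual counting line. The sketch below assumes the tracker (here DeepSORT) already delivers `(frame, track_id, class_name, y_centre)` tuples and that vehicles move in the direction of increasing y; the tuple layout, the line position and the function name are hypothetical, and detection, tracking and the Excel export are not shown.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple

# Hypothetical tracker output: (frame, track_id, class_name, y_centre in pixels).
Detection = Tuple[int, int, str, float]


def count_line_crossings(detections: Iterable[Detection], line_y: float) -> Counter:
    """Count each track once when its centre moves across line_y (increasing y)."""
    last_y: Dict[int, float] = {}  # track_id -> y_centre in the previous frame
    counted: Set[int] = set()      # track_ids that have already been counted
    counts: Counter = Counter()
    # Sorting by frame (then track_id) processes detections in time order.
    for _frame, track_id, class_name, y in sorted(detections):
        prev = last_y.get(track_id)
        if prev is not None and prev < line_y <= y and track_id not in counted:
            counted.add(track_id)
            counts[class_name] += 1
        last_y[track_id] = y
    return counts
```

Counting by track ID rather than by per-frame detections avoids counting the same vehicle again in every frame in which it is visible.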

Images

- Figure 1: Object detection model; the results are stored in an Excel file.

- Figure 2: Video captured by the camera.

- Figure 3: Results stored in an Excel file.

- Figure 4: AI hardware: Jetson Nano and camera.

- Figure 5: Watch box design.

Keywords

Traffic Supervision, Artificial Intelligence, Machine learning, YOLO object detection model, Jetson Nano

The project "Vision Tracking" detects which object a person's gaze is directed at, combining gaze-direction detection with simultaneous object recognition. As a preliminary stage for this application, gaze-direction detection and recognition of the corresponding object were implemented with a convolutional neural network.

Project Aims

Where do our eyes wander to first when shopping for our daily needs, where do they linger the longest and what consequently appeals to us the most on the supermarket shelves? How should a supermarket, for example, optimally position or design its product range in order to appeal more to its customers?


Images

- The gaze of a student is directed at the scissors here. The direction of gaze and the scissors are recognised by the neural network.
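Once a gaze point and labelled object boxes are available, deciding which object is being looked at can be reduced to a point-in-box test. This is a hypothetical sketch, assuming the network outputs the gaze as an image coordinate and each object as an axis-aligned bounding box; the project's actual post-processing is not described, and the names used here are illustrative.

```python
from typing import List, Optional, Tuple

# Hypothetical detection format: (label, x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[str, float, float, float, float]


def gazed_object(gaze: Tuple[float, float], boxes: List[Box]) -> Optional[str]:
    """Return the label of the smallest box that contains the gaze point, if any."""
    gx, gy = gaze
    hits = [b for b in boxes if b[1] <= gx <= b[3] and b[2] <= gy <= b[4]]
    if not hits:
        return None
    # When boxes are nested (e.g. a product on a shelf), prefer the smallest one.
    return min(hits, key=lambda b: (b[3] - b[1]) * (b[4] - b[2]))[0]
```

Logging the returned label per frame would give the dwell time per object, which is the quantity behind the supermarket-shelf questions above.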

Measuring and visualising magnetic fields is often necessary in the development and production of products that contain magnets. Application examples for a "Magnetic Field Mapper" include, among many others:

- Quality control in the production of permanent magnets or of products with built-in permanent magnets (e.g. loudspeakers)

- Checking and verification of computer models of magnetic fields

- Investigation of the manufacturing stability and ageing behaviour of magnets used with magnetic sensors, for qualification or in the application (example: angle sensors in cars)

The project used a commercially available 3D printer (CTC 16450; see the table below and Fig. 1) in which the extruder unit was essentially replaced by a Hall sensor from Infineon Technologies (TLV493D-A1B6). Above all, the 3D printer offers a build volume large enough to investigate larger magnetic fields in later applications, combined with comparatively good positioning accuracy.

Specifications of the 3D printer:

| Parameter | Value |
|---|---|
| Build volume (X × Y × Z) | 220 × 220 × 240 mm |
| XY axis speed (max.) | 300 mm/s |
| Z axis speed (max.) | 200 mm/s |
| XY positioning accuracy | 0.01 mm |
| Z positioning accuracy | 0.004 mm |

Stepper motors move the sensor in the x, y and z directions. An Arduino Mega 2560 microcontroller controls the stepper motors and receives and processes the sensor data.

- Fig. 1: Printer unit

The first application of the Magnetic Field Mapper is measuring the magnetic fields of diametrically magnetised disc magnets, such as those used in magnetic angle sensors (Fig. 2).

- Fig. 2: Diametrically magnetised disc magnets

For this measurement, a measurement grid with 0.25 mm spacing was defined over a 14 mm × 14 mm area (Fig. 3).

- Fig. 3: Measurement grid and measurement overview
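If the grid includes both edges of the field, each axis has 14 / 0.25 + 1 = 57 positions, i.e. 57 × 57 = 3,249 measurement points per x-y plane. A serpentine (boustrophedon) path is a common way to visit such a grid with short travel moves. The sketch below generates one, assuming the scan starts at the origin of the field and that reversing every other row is acceptable for the printer mechanics; the function name is hypothetical and the project's actual scan order is not described.

```python
from typing import List, Tuple


def serpentine_grid(size_mm: float, step_mm: float, z_mm: float = 0.0) -> List[Tuple[float, float, float]]:
    """Return (x, y, z) positions covering a square field row by row, alternating direction."""
    n = round(size_mm / step_mm) + 1  # points per axis, both edges included
    coords = [i * step_mm for i in range(n)]
    path: List[Tuple[float, float, float]] = []
    for row, y in enumerate(coords):
        # Even rows run left to right, odd rows right to left.
        xs = coords if row % 2 == 0 else coords[::-1]
        path.extend((x, y, z_mm) for x in xs)
    return path
```

`serpentine_grid(14.0, 0.25)` returns 3,249 positions; each one would then be sent to the stepper-motor controller before a sensor reading is taken.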

Various display options are available for visualising an x-y measurement at a constant z value. For example, Fig. 4 shows the magnetic field lines in the x-y plane and Fig. 5 the corresponding equipotential lines, including a measurement artefact. Fig. 6 shows the z-component of the magnetic field, i.e. the component pointing out of or into the x-y plane.
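The field-line plot relies on the direction of the in-plane field at each grid point, while the z-component plot uses Bz directly. The sketch below shows this per-point conversion, assuming each sample provides the three components Bx, By and Bz (the TLV493D is a 3D Hall sensor) in a common unit such as millitesla; the function name and the unit are assumptions.

```python
import math
from typing import Tuple


def field_summary(bx: float, by: float, bz: float) -> Tuple[float, float, float]:
    """Return (|B|, in-plane magnitude, in-plane angle in degrees) for one sample."""
    total = math.sqrt(bx * bx + by * by + bz * bz)
    in_plane = math.hypot(bx, by)                 # length of the x-y component
    angle_deg = math.degrees(math.atan2(by, bx))  # direction of the x-y component
    return total, in_plane, angle_deg
```

The in-plane angle is also the quantity a magnetic angle sensor evaluates, so the same conversion is useful for checking disc magnets intended for such sensors.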

The Magnetic Field Mapper project will be continued in further projects and theses. Possible improvements to the system include:

- More flexible graphical representation

- Use of a significantly smaller measurement grid

- Development of a user interface

- Simple exchange of the sensor used

- Fig. 4: Magnetic field lines.

- Fig. 5: Representation of the equipotential lines.

- Fig. 6: Representation of the z-component.

Detecting road damage is essential for maintaining road quality for road users. Detecting the severity of the damage is particularly important, as it helps the authorities decide where and what to prioritise for repair. In the project "Road Condition Detection", a CNN model for detecting road damage was created and trained. The project includes a user-friendly interface and automatically generates a road condition report. The CNN model classifies the condition of the road surface into four categories with two severity levels. As input, it uses images and GPS data from a GoPro dashcam as well as the current weather status (Fig. 1).

- Fig. 1: Project overview.

The final dataset combines several publicly available datasets, with a total of 11,947 images and 26,191 associated labels. Using augmentation in the online annotation tool Roboflow, the training set was increased to over 30,000 images and over 100,000 labels. Mosaic and cropping augmentation were applied to the entire dataset to increase the number of images from about 10,000 to about 30,000. Because there were significantly fewer original images of potholes, additional techniques such as colour changes, scaling, flipping and translation, as well as combinations of these techniques, were used (Fig. 2).

- Fig. 2: Augmentation.
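Geometric augmentations such as flipping also require the labels to be transformed. In the YOLO label format, each box is stored as `class x_center y_center width height`, normalised to the image size, so a horizontal flip only mirrors the x centre. The sketch below illustrates this on the label values only (the image itself would be mirrored with any image library); it is not Roboflow's implementation, and the function name is hypothetical.

```python
from typing import List, Tuple

# (class_id, x_center, y_center, width, height), coordinates normalised to [0, 1].
YoloBox = Tuple[int, float, float, float, float]


def hflip_labels(boxes: List[YoloBox]) -> List[YoloBox]:
    """Mirror normalised YOLO boxes to match a horizontally flipped image."""
    # Width, height and y centre are unchanged; only the x centre is mirrored.
    return [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in boxes]
```

A pothole box centred at x = 0.25 moves to x = 0.75 after the flip; flipping twice restores the original label.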

For image recognition, the YOLOv5 ("You Only Look Once") model was used. YOLOv5 is open source and serves as an efficient starting point for achieving good results quickly. The framework offers five model variants of different sizes. A recognition rate of about 80% was achieved across all classes.

To make road condition recognition as user-friendly as possible, a user interface was developed (Fig. 3).

- Fig. 3: User interface.

After a monitoring run has been completed, the software automatically generates a report on the road condition. The report contains summary statistics and shows the roads travelled on a map (Fig. 4). It also records the captured images of the road damage together with the corresponding exact GPS data (Fig. 5).

- Fig. 4: Report overview.

- Fig. 5: Detailed damage recording.
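The summary statistics in such a report can be derived by grouping the recorded detections by damage class and severity. This sketch assumes each detection is stored as a small record with class, severity and GPS position; the record layout, the severity encoding and the example values are placeholders, since the four categories and two severity levels are not named in the description.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Damage:
    damage_class: str  # hypothetical label, e.g. "pothole"
    severity: int      # assumed encoding: 1 = low, 2 = high
    lat: float
    lon: float


def summarise(damages: Iterable[Damage]) -> Dict[Tuple[str, int], int]:
    """Count detections per (class, severity) pair for the report statistics."""
    return dict(Counter((d.damage_class, d.severity) for d in damages))


def priority_positions(damages: Iterable[Damage], min_severity: int = 2) -> List[Tuple[float, float]]:
    """GPS positions of damage at or above a severity level, e.g. for repair planning."""
    return [(d.lat, d.lon) for d in damages if d.severity >= min_severity]
```

Keeping the GPS position with every detection lets the same records feed both the statistics table and the map view of the report.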

subject overview

Overview of lectures and courses, SWS (Semesterwochenstunden = weekly hours/semester) and ECTS (European Credit Transfer and Accumulation System) in the postgraduate programme "Artificial Intelligence for Smart Sensors and Actuators".

| 1st Semester | SWS | ECTS |
|---|---|---|
| AI and Machine Learning | 4 | 5 |
| Advanced Sensor Technology and Functionality | 4 | 5 |
| Model-Based Function Engineering | 4 | 5 |
| Advanced Programming | 4 | 5 |
| Edge Device Architectures | 4 | 5 |
| System Design | 4 | 5 |

| 2nd Semester | SWS | ECTS |
|---|---|---|
| Deep Learning and Computer Vision | 4 | 5 |
| Big Data | 4 | 5 |
| Case Study Machine Learning and Deep Learning | 4 | 5 |
| Autonomous Systems | 4 | 5 |
| Case Study Edge Device Architectures | 4 | 5 |
| Network Communication | 4 | 5 |

| 3rd Semester | SWS | ECTS |
|---|---|---|
| Subject-Related Elective Course (FWP) | 4 | 5 |
| Master's Module | - | 25 |
| Master's Thesis | - | 20 |
| Master's Seminar (two parts: Master's colloquium (2 ECTS) and seminar series "Career Start into German Technology Companies") | 2 | 5 |
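The credit figures add up to the 90 ECTS stated in the fact sheet, assuming the Master's Thesis (20 ECTS) and the Master's Seminar (5 ECTS) together make up the 25-ECTS Master's Module rather than being counted in addition to it. A quick arithmetic check under that assumption:

```python
# ECTS per module from the subject overview; the thesis and the seminar are
# assumed to be the two components of the 25-ECTS Master's Module.
SEMESTERS = {
    1: [5, 5, 5, 5, 5, 5],
    2: [5, 5, 5, 5, 5, 5],
    3: [5, 25],  # elective course (FWP) + Master's Module
}
MASTERS_MODULE_PARTS = [20, 5]  # Master's Thesis + Master's Seminar

per_semester = {sem: sum(credits) for sem, credits in SEMESTERS.items()}
total = sum(per_semester.values())

assert sum(MASTERS_MODULE_PARTS) == SEMESTERS[3][1]
print(per_semester, total)  # prints {1: 30, 2: 30, 3: 30} 90
```

Each semester carries the usual 30 ECTS, which matches the stated duration of three semesters and 90 ECTS points.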